Is a Match Really a Match? A Primer on the Procedures and Validity of Firearm and Toolmark Identification

Federal Bureau of Investigation

Abstract

The science of firearm and toolmark identification has been a fundamental part of forensic science since the early 20th century. Although the core principles remain the same, the current methodology uses validated standard operating procedures (SOPs) framed around a sound quality assurance system. In addition to reviewing the standard procedure the FBI Laboratory uses to examine and identify firearms and toolmarks, we discuss the scientific foundation for firearm and toolmark identification, the identification criterion for a “match,” and future research needs in the science.

What Does an Examiner Actually Do in a Firearm Examination? The General Examination Procedure for Bullets

The examination process typically begins when an examiner receives a suspect firearm, along with bullets (the projectiles) and spent cartridge cases recovered from a crime scene. The gun is test-fired to recover bullets and cartridge cases that serve as controls or, more precisely, as comparison samples. That is, these test-fired specimens will be compared at multiple levels with the specimens submitted from the crime scene.

To our knowledge, the vast majority of forensic laboratories in the United States and abroad have SOPs (technical protocols) that set forth in detail the proper examination procedures, and these procedures are highly similar across laboratories. This similarity certainly holds for the examination of bullets, which we discuss below as an example.

The initial bullet analysis (level one) involves the assessment of firearm class characteristics. These characteristics are permanent, measurable features of a tool or firearm that are determined before manufacture and that establish a restricted population or group source. The analysis of class characteristics (both for barrels and the transfer of their characteristics to bullets) creates the foundation for the firearm and toolmark examination procedure. If the comparison between an evidence bullet from a crime scene and a test-fired bullet from a suspect firearm reveals a convergence of discernible class characteristics, then the suspect firearm remains within the population (group) of “suspect” firearms and the analysis moves on to level two. In the FBI Laboratory, if even one type of class characteristic shows significant divergence, then the evidence bullet and suspect firearm belong to two separate populations (groups) and the suspect firearm is eliminated as having fired the bullet.

What class characteristics pertain to bullets? In addition to the diameter of the bullet, class characteristics stem directly from the nature of rifling, the longitudinal grooves that run the length of a barrel’s interior (the bore) and “twist” around it. Along with the lands, which are simply the raised portions of the bore between the grooves, the grooves are designed to impart a spin to a fired bullet so that it flies true, much as a quarterback imparts spin to a football when throwing a forward pass.

Generally, a firearm has three rifling characteristics: the number of land and groove impressions imparted to the bullet by the rifling in the bore, the widths of these land/groove impressions, and the direction of twist of these impressions. These characteristics are observed under a stereomicroscope. If, for example, bullet “A” displays four land/groove impressions and bullet “B” displays six land/groove impressions, then these two bullets were not fired from the same barrel. An incompatibility in any one of the three common rifling-characteristic measurements results in an exclusion.
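
The level-one logic described above amounts to an all-or-nothing check: a single incompatible rifling characteristic eliminates the barrel. The sketch below encodes that rule in Python. It is a hypothetical illustration, not FBI Laboratory software; the `RiflingClass` record, its width fields, and the 0.1 mm width tolerance are assumptions, since in practice width agreement is judged against measurement uncertainty and reference data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiflingClass:
    """Hypothetical record of the general rifling characteristics on a bullet."""
    land_groove_count: int   # number of land/groove impressions
    land_width_mm: float     # width of the land impressions
    groove_width_mm: float   # width of the groove impressions
    twist: str               # "right" or "left"


def level_one(evidence: RiflingClass, test_fired: RiflingClass,
              width_tol_mm: float = 0.1) -> str:
    """Return "elimination" if any class characteristic diverges.

    width_tol_mm is an assumed measurement tolerance, not a published value.
    """
    if evidence.land_groove_count != test_fired.land_groove_count:
        return "elimination"
    if evidence.twist != test_fired.twist:
        return "elimination"
    if abs(evidence.land_width_mm - test_fired.land_width_mm) > width_tol_mm:
        return "elimination"
    if abs(evidence.groove_width_mm - test_fired.groove_width_mm) > width_tol_mm:
        return "elimination"
    # Class characteristics converge: the firearm stays in the suspect group.
    return "proceed to level two"


# Bullet "A" (four impressions) versus bullet "B" (six impressions).
a = RiflingClass(4, 1.9, 2.6, "right")
b = RiflingClass(6, 1.9, 2.6, "right")
print(level_one(a, b))  # elimination
```

Because each check returns as soon as one characteristic disagrees, the function mirrors the rule that an incompatibility in any one of the three rifling measurements results in an exclusion.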

If a level-one analysis fails to result in an elimination (i.e., an exclusion), then the next step in the examination procedure is a level-two analysis. This involves the comparison of the smaller microscopic features on the bullet. In this stage, a trained firearm-toolmark examiner using a specially designed comparison microscope determines if sufficient agreement of features (usually scratch marks or striations) exists to conclude that a bullet was fired from a particular firearm. Thus firearm-toolmark identification is—at its core—the examination and evaluation of both class characteristics and the much finer microscopic marks, which, in turn, often enable an examiner to render an opinion on the source of the marks.

In addition, firearm-toolmark examinations function within a quality assurance (QA) system that sets forth essential elements of a sound technical procedure, requires specific examination documentation, and audits the procedure and documentation to ensure reliability and reproducibility. Through proficiency testing, the QA system enables laboratory experts and management to further evaluate the procedures and accuracy of an examiner. Through document audits, the QA system enables laboratory experts to monitor the general procedure to determine if it reflects the scientific community’s current standard for accuracy and reliability.

Typical Examination Conclusions and Criteria

Generally speaking, in a firearm-toolmark comparison, an examiner reaches one of three conclusions: elimination, identification, or inconclusive.

As we have already observed, an elimination occurs at level one when a discrepancy exists in the class characteristics exhibited by two toolmarks. In an FBI Laboratory Report of Examination, an example of an elimination conclusion would comprise the following language, which is simple and direct:

Because of differences in class characteristics, the K1 firearm barrel has been eliminated as having fired the Q1 bullet. Firearms that have general rifling characteristics such as those exhibited on the Q1 bullet include . . . .

At level two, the identification criteria in use were published in the report of the AFTE [Association of Firearm and Tool Mark Examiners] Criteria for Identification Committee (hereafter “AFTE”) in the July 1992 issue of the AFTE Journal. The report states that “opinions of common origin . . . [can] be made when the unique surface contours of two toolmarks are in ‘sufficient agreement.’” The report then sets forth the following:

Agreement is significant when it exceeds the best agreement demonstrated between toolmarks known to have been produced by different tools and is consistent with the agreement demonstrated by toolmarks known to have been produced by the same tool. . . . (AFTE 1992)

In an FBI Laboratory Report of Examination, an example of an identification conclusion would comprise the following language, also simple and direct: “The Q1 bullet was identified as having been fired from the barrel of the K1 firearm.”
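
The AFTE criterion compares the agreement in a questioned comparison against two reference sets: the agreement seen between known non-matches and the agreement seen between known matches. Examiners apply it through trained judgment rather than a formula. Purely to make the logic concrete, the sketch below assumes that agreement can be summarized as a single hypothetical score (for example, a count of consecutive matching striae or a correlation value) and reads "consistent with" known-match agreement as "at least as high as the lowest known-match score." Both of these are assumptions, not part of the AFTE report, and the reference scores are made up.

```python
def afte_style_conclusion(score: float,
                          known_nonmatch_scores: list[float],
                          known_match_scores: list[float]) -> str:
    """Hypothetical numeric reading of the AFTE (1992) 'sufficient agreement' test.

    score: agreement between the questioned mark and the test mark.
    known_nonmatch_scores: agreement between marks known to come from different tools.
    known_match_scores: agreement between marks known to come from the same tool.
    """
    exceeds_best_nonmatch = score > max(known_nonmatch_scores)
    consistent_with_matches = score >= min(known_match_scores)
    if exceeds_best_nonmatch and consistent_with_matches:
        return "identification"
    # Level one already passed (class characteristics agree), so anything short of
    # sufficient agreement is treated as inconclusive in this sketch.
    return "inconclusive"


nonmatches = [0.21, 0.35, 0.48]   # made-up reference scores
matches = [0.72, 0.81, 0.93]      # made-up reference scores
print(afte_style_conclusion(0.88, nonmatches, matches))  # identification
print(afte_style_conclusion(0.60, nonmatches, matches))  # inconclusive
```

A score of 0.60 beats every known non-match but falls short of the known-match range, so this sketch reports it as inconclusive rather than as an identification.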

When there is agreement in the class characteristics but insufficient agreement in the fine microscopic marks, an inconclusive response is appropriate. There are many reasons why tool or test marks that match in class characteristics do not reproduce the microscopic marks that an examiner uses to come to an identification conclusion—it may be the wrong tool or there may have been subsequent wear, abuse, corrosion, tampering, etc. Thus, in these instances (with rare exceptions stemming from particular circumstances that could possibly justify an elimination), an examiner states that he or she does not know if a known tool was used to produce a questioned toolmark. In an FBI Laboratory Report of Examination, an example of an inconclusive result would comprise the following language:

Specimen Q1 is a bullet that has been fired from a barrel rifled with six (6) grooves, right twist, like that of the K1 pistol; however, from microscopic examination, no definitive conclusion could be reached as to whether the Q1 bullet was fired from the barrel of the K1 pistol.

Although the agreement in class characteristics indicates a restricted group source and often is highly probative information, it is currently not possible to determine quantitatively the probative value of a level-one, class-only match, because firearm production and ownership figures are constantly changing and difficult to obtain. Thus an inconclusive finding is usually the only reasonable way to verbalize this situation. However, this also means that the strength of the results is essentially understated in these circumstances, particularly for bullet examinations. Firearm examiners know full well that the make and model of firearm that fired a bullet with “XYZ” rifling characteristics accounts for only a small fraction of all the firearms present in the geographical area of the crime. In effect, then, an inconclusive result implies neutrality when, in actuality, matching rifling characteristics tend to include the submitted firearm. The final outcome is that reports of examinations are sometimes unavoidably biased in favor of a defendant, a situation that, while not optimal, is tolerable, given the governing principles of the U.S. legal system.
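
The intuition that a class-only match "tends to include" the submitted firearm can be expressed as a likelihood ratio, provided the missing figure (the fraction of firearms in the area that share the class characteristics) were known. The sketch below assumes that the true source always reproduces its class characteristics and that the alternative source is a randomly chosen firearm from the area. The counts in the example are invented for illustration, since, as noted above, real production and ownership figures are difficult to obtain.

```python
def class_match_likelihood_ratio(matching_firearms: int, total_firearms: int) -> float:
    """Likelihood ratio for a class-only match under a simple illustrative model.

    Numerator:   P(class characteristics agree | suspect firearm fired the bullet) = 1.
    Denominator: P(agree | a random firearm from the area fired it)
                 = matching_firearms / total_firearms.
    """
    if not 0 < matching_firearms <= total_firearms:
        raise ValueError("need 0 < matching_firearms <= total_firearms")
    return total_firearms / matching_firearms


# Hypothetical: 200 of 10,000 firearms in the area share the "XYZ" rifling.
print(class_match_likelihood_ratio(200, 10_000))  # 50.0
```

A ratio above 1 supports inclusion; reporting the result as inconclusive treats it as though the ratio were 1, which is the understatement the paragraph describes.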

The Scientific Foundation of Firearm and Toolmark Identification

Two general propositions constitute the scientific foundation for the core of the firearm and toolmark identification discipline. We take the second proposition first, because it briefly explains why firearm and toolmark examiners are able to do what they do:

Most manufacturing processes involve the transfer of rapidly changing or random marks onto work pieces such as barrel bores, breechfaces, firing pins, screwdriver blades, and the working surfaces of other common tools. This is caused principally by the phenomena of tool wear and chip formation, or by electrical/chemical erosion. Microscopic marks on tools may then continue to change from further wear, corrosion, or abuse. (Scientific Working Group for Firearms and Toolmarks [SWGGUN] 2007)

Firearm-toolmark examiners are thus able, with training, to distinguish between two classes of items: those marked by the same tool and those marked by different tools. Surfaces marked by the same tool (whether the tool is a screwdriver or a barrel bore) tend to have strong similarities/correspondence in their fine features, whereas surfaces marked by different tools show far fewer similarities and less correspondence when viewed under a comparison microscope at typical magnifications of 10X–50X.

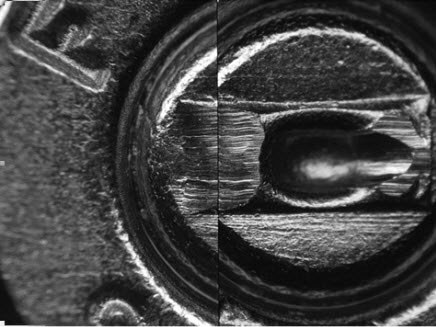

Figures 1, 2, and 3 each depict two test-fired cartridge cases viewed under a comparison microscope. In Figures 1 and 2, the cartridges were fired from the same firearm and show strong similarities between marks. By contrast, Figure 3 shows two cartridges fired from different firearms, which display no significant correspondence between marks.

The fundamental method for reaching conclusions is no different in principle for toolmark analysis than for any kind of comparative analysis—for example, for radiologists or dentists examining X-ray film. While in training, firearm-toolmark examiners compare many items, some known to have been marked by the same tool and others known to have been marked by different tools. These two classes of items tend to be highly distinguishable, and trainees quickly learn this, although the training is clearly necessary nonetheless. In the same vein, to learn to reliably recognize tooth decay on X-ray film, a dentist-in-training must observe numerous films of both healthy and decayed teeth.

Unlike the small ridges on fingers, a tool will change over time from wear and thus leave different marks on, for example, bullets. For bullets fired through a barrel in sequential fashion, bullet number 1 usually will display significant microscopic correspondence to bullet number 10, 100, or even number 500 or 1000 but, depending on the firearm and caliber, may not achieve this for bullet number 50,000. Impressed marks are more persistent by their nature. Given relatively clean parts, firing pin and breechface impressions at levels of microscopic significance can persist for many thousands of firings (Bonfanti and De Kinder 1999). However, the lack of infinite persistence does not diminish the reliability of examiner conclusions or the validity of the examination technique in terms of the propositional claims. As microscopic similarities/correspondence diminish across the firing sequence, an inconclusive result becomes increasingly likely.
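
The effect of wear on striated marks can be illustrated with a toy model. The sketch below treats a bore's signature as a random profile, adds the wear accumulated since bullet 1 as Gaussian noise whose spread grows with the square root of the number of intervening shots (as it would for a random walk), and uses Pearson correlation as a stand-in for microscopic correspondence. The profile length, wear rate, and shot-to-shot noise are hypothetical values chosen only to make the trend visible; they are not calibrated to any firearm or caliber.

```python
import math
import random


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation of two equal-length profiles (assumes neither is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def bullet_profile(bore: list[float], firing_number: int, rng: random.Random,
                   wear_rate: float = 0.5, shot_noise: float = 0.2) -> list[float]:
    """Toy striation profile on bullet `firing_number`, relative to bullet 1.

    Wear accumulated since the first shot is Gaussian with standard deviation
    wear_rate * sqrt(shots / 1000); shot_noise models shot-to-shot variation.
    """
    wear_sd = wear_rate * math.sqrt((firing_number - 1) / 1000)
    return [v + rng.gauss(0, wear_sd) + rng.gauss(0, shot_noise) for v in bore]


def correspondence_with_first(firings=(10, 100, 1000, 50_000), seed=42) -> dict[int, float]:
    """Correlation between bullet 1 and each later bullet in the hypothetical sequence."""
    rng = random.Random(seed)
    bore = [rng.gauss(0, 1) for _ in range(500)]  # hypothetical bore signature
    first = bullet_profile(bore, 1, rng)
    return {n: pearson(first, bullet_profile(bore, n, rng)) for n in firings}


for n, r in correspondence_with_first().items():
    print(f"bullet 1 vs bullet {n}: r = {r:.2f}")
```

In this toy model the correlation with bullet 1 stays high for nearby bullets and drops sharply for very distant ones, which is the point at which, in casework, an inconclusive result becomes more likely.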

It should be mentioned, however, that the decision criteria used by trained firearm-toolmark examiners, while objectively stated (see the section on examination conclusions and criteria above), are subjective in practice. Subjectivity, however, is by no means tantamount to being unscientific or unreliable. Elements of subjectivity are present, to a greater or lesser degree, in all sciences, especially in applications of a theory or technique.

Even so, microscopic comparisons are clearly observer-dependent. Because the similarities and dissimilarities between evidence specimens lie along a nonlinear continuum and require an observer to “draw a line” and make a judgment call, we firmly believe that the human examiner is integral to the science and the examination process. The human examiner (or a machine) cannot be completely disentangled from the science and viewed in isolation apart from the underlying physical properties of evidence specimens and therefore must be included in thoroughgoing tests of the validity of the science.

Thus the relevant question for the firearm/toolmarks science and the courts is not, Are toolmarks unique? At some level all physical objects are unique. Rather, the relevant question is, Can a trained human or machine reliably distinguish between toolmarks made by one tool versus toolmarks made by other tools? These considerations lead to the discipline’s first basic proposition, a directly testable claim that includes examiners as integral to the science: Class and microscopic marks imparted to objects by different tools will rarely if ever display similarities/correspondence sufficient to lead a qualified firearm-toolmark examiner to conclude that the objects were marked by the same tool. That is, a qualified examiner will rarely if ever commit a false-positive error (misidentification) (Bunch 2008).

This is to assert, for example, that an examiner will rarely if ever conclude from a microscopic comparison that a cartridge case fired from unknown gun A was fired in known gun B. This is a simple, robust, and readily testable claim. An analogous claim concerns eliminations, that is, conclusions that objects were marked by different tools. In past decades, three types of tests have been relevant to this claim: presumptive validity checks, comprehensive validity tests, and proficiency tests (the latter two are often termed “black-box” tests).

The first type, the presumptive validity check, usually involves examiners investigating a new manufacturing technique for indications of subclass marking, that is, to see whether a tool imparts marks (called subclass marks) that persist in highly similar form and could, because of this similarity, lead examiners to commit false-positive errors. Most of the time the answer is no, but occasionally it is yes; when such results are published, examiners are alerted to exercise care in those circumstances (Nichols 1997; Nichols 2003).

The first type of black-box test is a comprehensive validity test. In these tests, errors from virtually all possible sources are incorporated into the final results, though every effort is made to eliminate quality-assurance errors. The results directly test the validity of the discipline and the proposition that trained firearm-toolmark examiners can distinguish between marks made by the same firearm or tool and marks made by different ones. Several tests of this type have been conducted within the Firearms-Toolmarks Unit (FTU) of the FBI Laboratory, where strict controls could be maintained (Bunch and Murphy 2003; DeFrance and Van Arsdale 2003; Giroux 2008; Murphy n.d.; Orench 2005; Smith 2005). In comprehensive validity tests, qualified examiners conduct numerous microscopic examinations and provide their conclusions to the test provider. The important elements of these tests (Bunch and Murphy 2003) are:

- Strict anonymity. If an error is committed, a means must not exist to trace the error back to the examiner who committed it. Why? There must be no incentive for test examiners, acting in their perceived career interest, to “play it safe” by providing an inconclusive result. Instead, the tests should mimic actual casework as much as possible, except where even greater difficulty in the examinations is desired.

- Blind tests. The tests are blind, insofar as the test maker/provider does not know who has what test packet, and the test participants have no possible means to learn the answers, either from the test providers or from discussions with other examiners. However, a totally blind test, wherein the test examiners are unaware they are taking a test, is virtually impossible to create and administer in practice.

- Mandatory returns. Test examiners cannot “opt out” once they begin. They must provide complete responses.

- No ambiguous responses. The test should have no conclusions in narrative form, which have the potential for ambiguity. Clear answer sheets are required.

- Qualified participants. Only fully qualified examiners will participate, as determined by an examiner’s respective laboratory. (Bunch and Murphy 2003)

The second type of black-box test is a proficiency test. These are quality-assurance devices designed to test an examiner’s competence or the competence of a laboratory system, not the validity of a theory or technique. When used for validity and error-rate purposes, these tests have many drawbacks. These include (1) anyone who pays the fee may participate in these tests, including attorneys and examiner trainees; (2) they are not as blind as validity tests; (3) participants are not anonymous; and (4) returns are not mandatory (Peterson and Markham 1995). Finally, proficiency tests are used for self-critical evaluation. Once an error or deficiency is identified, corrective action is undertaken. Despite these drawbacks, thousands of these tests have been administered across the United States, and they do provide some information related to validity that is superior to no information at all.

For firearm-toolmark identification, the overall results of the validity and proficiency tests are encouraging. In full-scale validity tests on bullets and cartridge cases conducted within and outside the FBI Laboratory, no errors have so far been observed (that is, the observed error rate is zero). The combined results of a series of five intra-FTU validity tests (which include two tests on toolmarks) showed a false-positive rate of 0.1 percent and a false-negative rate of 1.8 percent (Bunch and Murphy 2003; DeFrance and Van Arsdale 2003; Giroux 2008; Murphy n.d.; Smith 2005). Another intra-FTU test, on fracture matching, produced no false-positive or false-negative errors (Orench 2005). Proficiency-test results, unsurprisingly, show slightly higher error rates, with an overall average in the range of 1 to 3 percent (Peterson and Markham 1995).
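
Error rates from a validity test depend on how the answer sheets are tallied, in particular on how inconclusive responses are counted. The sketch below assumes one possible convention: the false-positive rate is the share of different-source comparisons reported as identifications, and the false-negative rate is the share of same-source comparisons reported as eliminations, with inconclusives left in both denominators. The convention used in the studies cited above is not stated here, and the counts in the example are invented.

```python
from collections import Counter


def error_rates(responses: list[tuple[str, str]]) -> tuple[float, float]:
    """Return (false_positive_rate, false_negative_rate) from answer-sheet data.

    Each response is (ground_truth, conclusion), with ground_truth in
    {"same", "different"} and conclusion in
    {"identification", "elimination", "inconclusive"}.
    Inconclusives stay in the denominators (an assumed convention).
    """
    tally = Counter(responses)
    different = sum(n for (truth, _), n in tally.items() if truth == "different")
    same = sum(n for (truth, _), n in tally.items() if truth == "same")
    false_pos = tally[("different", "identification")]
    false_neg = tally[("same", "elimination")]
    return false_pos / different, false_neg / same


# Invented answer sheets: 400 different-source and 250 same-source comparisons.
sheets = ([("different", "elimination")] * 380 + [("different", "inconclusive")] * 18
          + [("different", "identification")] * 2
          + [("same", "identification")] * 240 + [("same", "inconclusive")] * 5
          + [("same", "elimination")] * 5)
fp, fn = error_rates(sheets)
print(f"false-positive rate {fp:.1%}, false-negative rate {fn:.1%}")
# false-positive rate 0.5%, false-negative rate 2.0%
```

Dropping inconclusives from the denominators would raise both rates (to 2/382 and 5/245 here), so a reported error rate is only interpretable alongside the tallying convention behind it.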

To illustrate one of the key drawbacks of proficiency-test error rates, namely the unknown qualifications of participants, consider the results of a particular test in 1992. Atypically, this test allowed participants to indicate whether they were trainees, so the results can be interpreted in light of each examiner’s experience level. Of the 511 comparison opinions issued by the 130 responders in this test, six were incorrect (five false exclusions and one false positive). Four of these six errors were made by the 27 trainees, whereas the remaining two errors were made by the 103 experienced responders (Collaborative Testing Services 1992). This example at least suggests that a significant percentage of errors in proficiency tests are made by as-yet-unqualified examiners.
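
The figures from the 1992 test can be put on a common footing with a few lines of arithmetic. Because only the number of responders in each group is reported, not how many of the 511 opinions each group issued, the sketch below compares errors per responder rather than per opinion; that normalization is an assumption made for illustration.

```python
# Figures reported for the 1992 CTS test (Report No. 92-4).
opinions, errors = 511, 6
trainees, trainee_errors = 27, 4
experienced, experienced_errors = 103, 2

# Sanity check: the two groups account for every responder and every error.
assert trainees + experienced == 130 and trainee_errors + experienced_errors == errors

overall = errors / opinions                       # share of all opinions in error
per_trainee = trainee_errors / trainees           # errors per trainee responder
per_experienced = experienced_errors / experienced

print(f"overall: {overall:.1%} of opinions")
print(f"errors per responder: trainees {per_trainee:.3f}, "
      f"experienced {per_experienced:.3f} "
      f"(ratio about {per_trainee / per_experienced:.1f})")
```

Trainees made up about a fifth of the responders (27 of 130) but accounted for two-thirds of the errors (4 of 6), the pattern the paragraph points to.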

It also must be stressed here that error rates alone cannot be converted directly into a probability estimate, for example, that a bullet was or was not fired from a particular barrel. Leaving aside for a moment individual examiner abilities, case difficulty, validity-/proficiency-test design, etc., a sound probability estimate of this kind is also contingent on the base rate or prior odds that a bullet submitted to a laboratory was indeed fired from the submitted firearm, as well as the general examination probability of an inconclusive finding. This topic lies beyond the scope of this paper, but suffice it to say that an error rate of 1 percent for a particular examination—a rate stemming from validity-/proficiency-test data—does not warrant the assertion that a 1 percent chance exists that an examiner is wrong when concluding identity.
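
The point that an error rate is not the probability that a particular identification is wrong follows from Bayes’ theorem. The sketch below computes the probability that a reported identification is false from three inputs: the prior probability that the submitted firearm fired the bullet (the base rate), the probability of an identification when it did (less than 1, because some same-source comparisons end as inconclusive), and the false-positive rate. All numbers in the example are hypothetical and chosen only to show how strongly the answer depends on the base rate.

```python
def prob_identification_wrong(prior_same_source: float,
                              p_id_if_same: float,
                              p_id_if_different: float) -> float:
    """P(different source | examiner reports identification), by Bayes' theorem."""
    p_id_and_same = prior_same_source * p_id_if_same
    p_id_and_different = (1 - prior_same_source) * p_id_if_different
    return p_id_and_different / (p_id_and_same + p_id_and_different)


# The same hypothetical 1 percent false-positive rate under three base rates.
for prior in (0.1, 0.5, 0.9):
    p = prob_identification_wrong(prior, p_id_if_same=0.9, p_id_if_different=0.01)
    print(f"base rate {prior:.0%}: P(identification wrong) = {p:.2%}")
```

With an identical 1 percent false-positive rate, the chance that a reported identification is wrong comes out at roughly 9 percent, 1.1 percent, and 0.12 percent for base rates of 10, 50, and 90 percent, which is why an error rate alone does not yield that probability.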

Clearly, limitations exist with error-rate data of this kind. Strong efforts usually are made to correct errors stemming from improper human action or inference. As we have observed, proficiency tests have their own issues. Aggregate data do not speak directly to individual error rates, which, owing to small sample size and learning/self-correction, are difficult if not impossible to determine reliably. This is especially true for a “current” examiner error rate. Obviously, errors can and do occur, but when examining the data as a whole, it becomes clear that conclusions from firearm and toolmark comparisons conducted by qualified examiners are highly reliable.

Research Needs for the Discipline

Research efforts in the field of firearm-toolmark identification can be divided into two broad categories: those that incorporate examiner performance and those that do not. To date, the former category has consisted primarily of blind validity tests with control examiners or black-box tests (see Scientific Foundation section). Given the endless variety of tools and firearms and the occasional development of new manufacturing methods, this effort, in principle, could be expanded considerably. Having an entity outside the forensic laboratory prepare and administer each test could maximize objectivity, allow for testing in multiple laboratories, and minimize the workload on laboratory examiners. In addition to providing useful information about errors, validity-test results can be used to evaluate current identification and elimination standards, while helping to identify possible problems in the field (such as the discovery of significant subclass marks produced by a specific make and model tool).

The second area of research is casework-focused and involves machine-based analyses of toolmarked surfaces. To date, the focus of this research has involved computerized systems that analyze the similarity of surfaces marked by the same tool and those marked by different tools (Bachrach 2002). The results of these experiments have shown conclusively that a machine can detect significant differences between known matches and known nonmatches. With continued research and development, machines such as these could eventually be used in laboratories for independent confirmations of examiners’ conclusions in casework and, ultimately, may replace human judgment in the comparison process. Because the working surfaces of different tools change at unpredictable rates, “ID machines” (or the identification cut-off values inherent to these systems) would need to be fine-tuned to reach acceptable trade-offs between false positives and false negatives, and between sensitivity and specificity. This desired balance will undoubtedly be the subject of some discussion and may require specific research to justify whatever balance is ultimately selected. Clearly, machines will not find wide acceptance unless and until the overall quality of their output can be demonstrated to match or exceed that of human examiners.
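
Computerized comparisons of this kind generally reduce two marked surfaces to numerical profiles and compute a similarity score. As a minimal sketch, and not the 3D system described by Bachrach (2002), the code below scores two striation profiles by their maximum Pearson correlation over small lateral shifts, then shows how moving an identification cut-off trades false positives against false negatives. The synthetic tool signatures, noise level, 20-sample shift window, and cut-off values are all hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation of two equal-length profiles (assumes neither is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def max_shifted_correlation(a, b, max_shift=20):
    """Best correlation between profiles a and b over shifts of up to max_shift samples."""
    best = -1.0
    for shift in range(-max_shift, max_shift + 1):
        # Positive shift compares a[shift + i] with b[i]; negative compares a[i] with b[i - shift].
        x, y = (a[shift:], b) if shift >= 0 else (a, b[-shift:])
        n = min(len(x), len(y))
        best = max(best, pearson(x[:n], y[:n]))
    return best


def error_rates_at_cutoff(match_scores, nonmatch_scores, cutoff):
    """(false-positive rate, false-negative rate) if scores >= cutoff are called identifications."""
    fp = sum(s >= cutoff for s in nonmatch_scores) / len(nonmatch_scores)
    fn = sum(s < cutoff for s in match_scores) / len(match_scores)
    return fp, fn


rng = random.Random(7)


def profile(signature, noise=0.6):
    """One hypothetical mark: the tool's signature plus mark-to-mark noise."""
    return [v + rng.gauss(0, noise) for v in signature]


tools = [[rng.gauss(0, 1) for _ in range(300)] for _ in range(6)]
match_scores = [max_shifted_correlation(profile(t), profile(t)) for t in tools]
nonmatch_scores = [max_shifted_correlation(profile(tools[i]), profile(tools[j]))
                   for i in range(len(tools)) for j in range(i + 1, len(tools))]
for cutoff in (0.1, 0.4, 0.75):
    fp, fn = error_rates_at_cutoff(match_scores, nonmatch_scores, cutoff)
    print(f"cut-off {cutoff}: false-positive rate {fp:.2f}, false-negative rate {fn:.2f}")
```

A cut-off set too low admits non-matches (false positives), and one set too high rejects true matches (false negatives); choosing where to set it is the balancing problem anticipated above.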

Conclusion

In sum, we firmly believe that the firearm-toolmark discipline is both highly valuable and highly reliable in its traditional methods. However, additional research is highly beneficial and, depending on its purpose and design, would tend to better address potential error, identify manufacturing methods that are suspect for comparison purposes, and further develop machine systems and perhaps probabilistic models.

Acknowledgment

This is publication number 09-16 of the Laboratory Division of the Federal Bureau of Investigation. Names of commercial manufacturers are provided for identification purposes only, and inclusion does not imply endorsement by the FBI.

References

AFTE Criteria for Identification Committee. Theory of identification, range of striae comparison reports and modified glossary definitions—An AFTE Criteria for Identification Committee report, AFTE Journal (1992) 24(3):336–340.

Bachrach, B. Development of a 3D-based automated firearms evidence comparison system, Journal of Forensic Sciences (2002) 47:1253–1264.

Bonfanti, M. S. and De Kinder, J. The influence of the use of firearms on their characteristic marks, AFTE Journal (1999) 31:318–323.

Bunch, S. G. Statement of Stephen G. Bunch. Affidavit [May 28, 2008] for Frye challenge in United States v. Worsley, 2003 FEL 6856 (D.C. Superior Ct. 2008). Opposing and Supportive Viewpoints to Firearm and Toolmark Identification.

Bunch, S. G. and Murphy, D. P. A comprehensive validity study for the forensic examination of cartridge cases, AFTE Journal (2003) 35:201–203.

Collaborative Testing Services (CTS). Firearms Analysis Report No. 92-4. Sterling, Virginia, 1992.

DeFrance, C. S. and Van Arsdale, M. D. Validation study of electrochemical rifling, AFTE Journal (2003) 35:35–37.

Giroux, B. N. Empirical and validation study of consecutively manufactured screwdrivers. Submitted for publication, 2008.

Murphy, D. P. Unpublished report. Drill Bit Validation Study. FBI Laboratory, Firearms-Toolmarks Unit, Quantico, Virginia, n.d.

Nichols, R. G. Firearm and toolmark identification criteria: A review of the literature, Journal of Forensic Sciences (1997) 42:466–474.

Nichols, R. G. Firearm and toolmark identification criteria: A review of the literature, part II, Journal of Forensic Sciences (2003) 48:318–327.

Orench, J. A. A validation study of fracture matching metal specimens failed in tension, AFTE Journal (2005) 37:142–149.

Peterson, J. L. and Markham, P. N. Crime laboratory proficiency testing results, 1978–1991, II: Resolving questions of common origin, Journal of Forensic Sciences (1995) 40:1009–1029.

Scientific Working Group for Firearms and Toolmarks (SWGGUN), Firearm & toolmark identification (PowerPoint). (Revised October 23, 2007). Admissibility Resource Kit. Appendix I—Visual Aids.

Smith, E. Cartridge case and bullet comparison validation study with firearms submitted in casework, AFTE Journal (2005) 36:130–135.

About the Authors

Stephen G. Bunch, Chief, Firearms-Toolmarks Unit, FBI Laboratory, Quantico, Virginia

Erich D. Smith, Physical Scientist, Firearms-Toolmarks Unit, FBI Laboratory, Quantico, Virginia

Brandon N. Giroux, Physical Scientist, Firearms-Toolmarks Unit, FBI Laboratory, Quantico, Virginia

Douglas P. Murphy, Physical Scientist, Firearms-Toolmarks Unit, FBI Laboratory, Quantico, Virginia

The Crime Scene Investigator Network gratefully acknowledges the Federal Bureau of Investigation for allowing us to reproduce this article.